* Cardinality: Variables with a large number of categories tend to dominate variables with fewer categories. This is particularly true for tree-based algorithms.

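* Rare Labels: Labels that occur in only a few observations cause operational problems precisely because they are rare. A rare label may appear in the training set or only in the test set, so a label the model never saw during training can show up at prediction time. If such labels are present in your observations, you may need additional handling steps in model deployment.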

* Categorical variables are often stored as strings, but Scikit-learn models need numbers, so the labels have to be encoded.
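
The categorical issues above can be illustrated with a minimal sketch in plain Python. The function names, the `min_count` threshold, and the reserved `"Rare"` label are assumptions made for illustration, not part of any library: infrequent labels are grouped into one bucket, and labels never seen during training fall back to the same bucket.

```python
from collections import Counter

def fit_encoder(labels, min_count=2):
    """Learn an integer code for each frequent label; group the rest as 'Rare'."""
    counts = Counter(labels)
    frequent = sorted(label for label, c in counts.items() if c >= min_count)
    mapping = {label: code for code, label in enumerate(frequent)}
    # Hypothetical reserved bucket for rare and unseen labels.
    mapping["Rare"] = len(frequent)
    return mapping

def encode(labels, mapping):
    """Replace each label by its code; unknown labels get the 'Rare' code."""
    return [mapping.get(label, mapping["Rare"]) for label in labels]

train = ["red", "blue", "red", "green", "blue", "red"]
mapping = fit_encoder(train)          # "green" occurs once, so it is rare
encoded = encode(["blue", "green", "purple"], mapping)
print(mapping)
print(encoded)
```

Because the mapping is learned once from the training data, the same `encode` call can be reused at prediction time without failing on a label that was never observed.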

The third consideration is the distribution of the numerical variables. Specifically, we check whether a variable follows a Gaussian distribution or is skewed, and then choose a representation of the feature that the ML model can use.

Outliers are unusual or unexpected values in a variable that are extremely high or extremely low compared to the other values of that variable.

The magnitude of the features also affects model performance. For example, in house price prediction, one variable is the area in sq. km and another is the number of rooms, which varies from 1 to 10. In a linear model, the variable that takes the higher values will have the predominant role in the predicted house price. In this case, the area variable is important for determining the price of the house, but the number of rooms also plays an important role. So, some algorithms are sensitive to scale. The algorithms that are sensitive to magnitude are:

* Linear and Logistic Regression

* Neural Networks

* Support Vector Machines

* KNN

* K-means clustering

* Linear Discriminant Analysis(LDA)

* Principal Component Analysis(PCA)
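
A common way to reduce this sensitivity is to bring the features to a comparable scale before training. The following is a minimal sketch of standardization (zero mean, unit standard deviation) in plain Python; the `standardize` helper and the sample values are hypothetical.

```python
from statistics import mean, pstdev

def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant feature carries no scale information.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

# Hypothetical house data: area has much larger magnitudes than rooms.
area = [850.0, 1200.0, 1500.0, 2100.0]
rooms = [2, 3, 4, 7]

scaled_area = standardize(area)
scaled_rooms = standardize(rooms)
print(scaled_area)
print(scaled_rooms)
```

After scaling, both variables vary over a similar range, so neither dominates purely because of its units.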

Solutions for Feature Engineering Problems: A variety of techniques is used to resolve feature engineering problems. For missing data, categorical labels, distributions, and outliers, we need to transform the variables.

* In missing data imputation, we can use mean/median imputation or arbitrary value imputation.

* For missing values in categorical variables, add a "Missing" category or a missing indicator.

* For distributions, mathematical transformations can bring a skewed variable closer to a Gaussian shape. Common variable transformations are logarithmic, reciprocal, exponential, etc.

* For outliers, we can apply discretization or truncation. Unsupervised discretization uses equal-width, equal-frequency, or k-means binning. Supervised discretization uses decision trees.
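
Three of the techniques in this list can be sketched in a few lines of plain Python: median imputation, a logarithmic transformation, and equal-width discretization. The helper names and the sample `incomes` values are hypothetical, and missing values are assumed to be represented as `None`.

```python
import math
from statistics import median

def impute_median(values):
    """Replace missing entries (None) with the median of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def log_transform(values):
    """Natural log of each value; only valid for strictly positive inputs."""
    return [math.log(v) for v in values]

def equal_width_bins(values, n_bins):
    """Assign each value to one of n_bins intervals of equal width."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    if width == 0:
        return [0 for _ in values]
    # The maximum value would land in bin n_bins, so clamp it to the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]

incomes = [20.0, None, 35.0, 50.0, None, 400.0]   # 400.0 acts as an outlier
filled = impute_median(incomes)
logged = log_transform(filled)
bins = equal_width_bins(filled, n_bins=4)
print(filled)
print(bins)
```

In this sample the extreme value ends up alone in the top bin, which is how discretization limits the influence of an outlier.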

If the data are images, text, time series, or distances, we need to extract or create new features from them to feed to our models. For text, we can count the characters, words, sentences, and paragraphs, and compute lexical diversity or TF-IDF features. These transformations are applied before the data are passed to the ML model.
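
As a rough illustration of the simpler text features, the sketch below counts characters, words, and sentences and computes lexical diversity as the ratio of unique words to total words. The tokenization rules (letters and apostrophes for words, `.`, `!`, `?` as sentence ends) are simplifying assumptions, and `text_features` is a hypothetical helper.

```python
import re

def text_features(text):
    """Extract simple count-based features from a piece of text."""
    words = re.findall(r"[A-Za-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return {
        "n_chars": len(text),
        "n_words": len(words),
        "n_sentences": len(sentences),
        # Unique words divided by total words; 0.0 for empty text.
        "lexical_diversity": len(set(words)) / len(words) if words else 0.0,
    }

features = text_features("The model learns. The model predicts!")
print(features)
```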

Feature Selection: Feature selection means finding the most predictive variables in the dataset and building the models using only those variables. We select features for the following reasons:

* Simple models are easier for their consumers to interpret. A model built with 10 features is easier to understand than one built with 100 features. Fewer features also reduce the risk of data errors and data redundancy.

* Shorter Training Times

* Models built with fewer features are easier for software developers to implement, integrate with business systems, and put into production. The JSON messages exchanged between the business systems and the model are smaller, since they carry the input variables to the model and the prediction back to the system. There is also less code to write and fewer potential errors to handle.

Feature Selection Methods:

* Filter Method: These are simple statistical methods. They are independent of the ML algorithm that will be built at the end and are based only on the characteristics of the variables. The advantages are quick removal of junk features (particularly the loss of variable), fast computation, and being model agnostic, which means the selected features are suitable for any algorithm. The disadvantage is that these methods do not capture redundancy or feature interaction.

* Wrapper Method: It takes into account the algorithm we intend to build when selecting features. It does not evaluate one feature at a time but evaluates groups of features. The advantage is that it considers feature interaction. The disadvantage is that it is not model agnostic.

* Embedded Method: Feature selection is performed during the training of the ML algorithm. Lasso regression is an example of this method. It captures feature interaction and gives good model performance. It is faster than the wrapper method, but it is not model agnostic.
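
To make the filter idea concrete, here is a minimal sketch of one filter criterion: dropping features whose variance does not exceed a threshold, which removes constant columns without consulting any model. The `low_variance_filter` helper, the threshold, and the sample columns are assumptions for illustration.

```python
from statistics import pvariance

def low_variance_filter(columns, threshold=0.0):
    """Return the names of columns whose population variance exceeds threshold."""
    return [name for name, values in columns.items()
            if pvariance(values) > threshold]

# Hypothetical dataset stored column by column.
data = {
    "rooms": [2, 3, 4, 7],
    "country_code": [1, 1, 1, 1],          # constant, carries no information
    "area": [850.0, 1200.0, 1500.0, 2100.0],
}

kept = low_variance_filter(data)
print(kept)
```

The check looks at each variable on its own, which is why it is fast and model agnostic but cannot detect two features that duplicate each other.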

Finally, in Model Building, we try various algorithms and analyze their performance to choose the one that produces the best results. We evaluate statistical metrics such as mean squared error for regression or accuracy for classification. In business, we also need to relate the statistical metrics to business value. For example, if we build a model for advertising, we can evaluate it by the number of new customers gained while using the model. Several kinds of models can be built, such as:

* Linear Models (Linear and Logistic Regression, MARS)

* Tree Models (Random Forests, Gradient Boosted Trees)

* Neural Networks for a supervised model

* Clustering Algorithm

Metrics used to measure model performance include ROC-AUC for classification, which is computed from the predicted probabilities and summarizes how often the model makes a good assessment versus a wrong one. The mean squared error (MSE) or root mean squared error (RMSE) is used for regression models.
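
Both metrics can be computed by hand, which helps in understanding what they measure. The sketch below is a plain-Python illustration rather than a library implementation: RMSE follows its definition, and ROC-AUC is computed as the fraction of (positive, negative) pairs in which the positive example receives the higher score, with ties counted as half. It assumes binary labels 0 and 1 with at least one example of each.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between targets and predictions."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))

def roc_auc(y_true, scores):
    """Share of positive/negative pairs ranked correctly by the scores."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5   # a tie counts as half a correct ranking
    return wins / (len(pos) * len(neg))

error = rmse([2.0, 4.0], [4.0, 2.0])
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(error, auc)
```

The pairwise loop is quadratic in the number of examples, so it is only meant for small illustrations.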

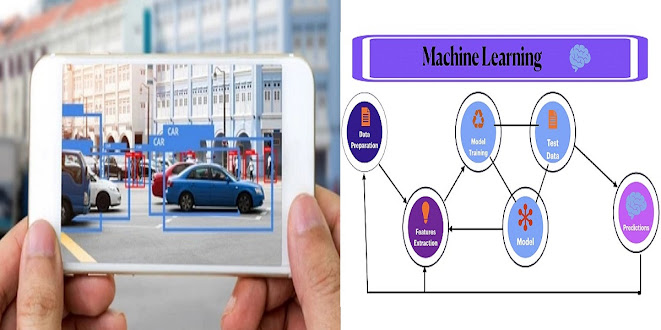

Programming in Machine Learning Models: In the ML pipeline, we learn from the data and then transform the data or predict something from it. In procedural programming, the different steps learn parameters from the data and then use those parameters to make the transformations or predictions. The learned parameters, such as mean values, regression coefficients, or lasso regression models, are stored as data. In object-oriented programming, we use classes and write the feature engineering code in the form of objects. These objects can store data (attributes or properties) and instructions or procedures (methods) to modify that data or obtain the predictions. In an ML model, the objects learn and store parameters, which are automatically refreshed every time the model is retrained. Such objects have fit and transform methods: the fit method learns the parameters, and the transform method transforms data with the learned parameters.
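
The fit/transform pattern can be sketched with a small hand-written class. `MeanImputer` below is a hypothetical example that only mirrors the Scikit-learn convention of `fit` and `transform`; it is not a Scikit-learn class, and missing values are assumed to be `None`.

```python
from statistics import mean

class MeanImputer:
    """Learns the mean of a variable and uses it to fill missing values."""

    def __init__(self):
        self.mean_ = None   # learned parameter, set by fit()

    def fit(self, values):
        """Learn the parameter (the mean of the observed values)."""
        observed = [v for v in values if v is not None]
        self.mean_ = mean(observed)
        return self

    def transform(self, values):
        """Apply the learned parameter to new data."""
        if self.mean_ is None:
            raise RuntimeError("call fit() before transform()")
        return [self.mean_ if v is None else v for v in values]

imputer = MeanImputer().fit([1.0, None, 3.0])   # learns mean_ = 2.0
new_data = imputer.transform([None, 10.0])      # reuses the stored mean
print(imputer.mean_, new_data)
```

Retraining simply means calling `fit` again on fresh data, which overwrites the stored parameter without any change to the code that calls `transform`.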

Once we are satisfied with the results, we can move on to Model Deployment. Deploying the model means that the system, for example in the cloud, takes the incoming data, transforms and selects the variables, passes them to the trained model, and obtains the prediction. Our in-house software should be versioned for each ML system and tested across the systems where it is installed to confirm the expected outputs, which minimizes deployment time. A heavier case is deploying a CNN that was trained on a large dataset to make predictions. Big data is normally used for analytics and ML. It is a large volume of data that may be structured, semi-structured, or unstructured. In business, big data often takes the form of large text documents, images, or complex time series. For these, we build deep learning models with many layers and many parameters to be determined. They normally rely on GPUs, and neural networks can be extremely heavy to compute.

ML System Architecture: There are 4 architecture approaches for ML systems:

1. Model Embedded in an application

2. Served via Dedicated Service

3. Model published as data(Streaming)

4. Offline Prediction

When you serve ML models, there are different file formats to choose from. In Python, you can serialize the model object with the pickle module. Open-source tools like MLflow provide a common serialization format for exporting Spark, Scikit-learn, and TensorFlow models. There are also language-agnostic formats for sharing models.
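
A minimal sketch of pickle-based serialization follows. To keep it self-contained, the "model" here is a hypothetical dictionary of learned parameters; a fitted estimator object is serialized with the same two calls.

```python
import pickle

# Hypothetical learned parameters standing in for a trained model object.
model = {"coefficients": [0.5, 10.0], "intercept": 5.0, "version": "1.0.0"}

# Serialize to bytes; these bytes can be written to a file or object storage.
payload = pickle.dumps(model)

# Later, in the serving application, restore the object from the bytes.
restored = pickle.loads(payload)
print(restored == model)
```

Pickle files should only be loaded from trusted sources, because unpickling can execute arbitrary code. The restored object also depends on the same Python classes being importable in the serving environment, which is one reason language-agnostic formats exist.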

In the Embedded Approach, the trained model is packaged as a dependency of our application. For example, we install it into the application with pip install <mymodel>, or pull the trained model in at build time from file storage such as AWS S3. The application is then able to make predictions on the fly. For example, in a single Django application, an HTML page has a form, and when you submit the form, the Django app takes the input and produces the prediction. In a mobile app, a native ML library performs the prediction on the device.

In the Dedicated Service Approach, the trained model is wrapped in a separate ML service. For example, when the form is submitted, the Django server makes a second call to a dedicated microservice, which is responsible for the prediction. Django makes the call via REST, gRPC, or SOAP. Here, the model deployment is separate from the main application.

Serve the Model via REST API: The model prediction is exposed through a REST API. It is simple to set up a server that transfers to the client a representation of the state of a requested resource. In our case, the requested resource is the model's prediction, and the client could be a website or a mobile device. A REST API can also serve multiple models at different API endpoints, and it is easy to scale by adding more instances of the API application behind a load balancer.
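
The core of such an endpoint is a function that turns a JSON request body into a JSON response. The sketch below shows only that part, without a web server or framework; the weights, the feature names, and the `handle_predict` function are hypothetical, and a real service would call a trained model instead of the hard-coded linear formula.

```python
import json

# Hypothetical parameters of a trained linear model.
WEIGHTS = {"area": 0.5, "rooms": 10.0}
INTERCEPT = 5.0

def predict(features):
    """Linear prediction from a dict of input features."""
    return INTERCEPT + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)

def handle_predict(request_body):
    """Map a JSON request body to an (HTTP status, JSON response body) pair."""
    try:
        features = json.loads(request_body)
        prediction = predict(features)
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing feature, or a wrong input type.
        return 400, json.dumps({"error": "invalid input"})
    return 200, json.dumps({"prediction": prediction})

status, body = handle_predict('{"area": 100, "rooms": 3}')
print(status, body)
```

Keeping this function independent of the web framework means the same logic can sit behind a Django view or a dedicated microservice, and it can be tested without starting a server.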

The Model Published as Data approach leverages a streaming platform like Apache Kafka or Apache Pulsar. The training process publishes the model to the streaming platform, and the application consumes the model at runtime, which lets it pick up any model update. For example, the Django application can consume a dedicated Kafka topic where new versions of the model are published. Here, models can be upgraded seamlessly without redeploying the application.

Offline Prediction is an asynchronous design. The predictions are triggered and run asynchronously by the application or by a scheduled job. After a few hours or days, the predictions are collected into a database or some other form of storage and consumed via dashboards or reports. So there is a trade-off between the systems and the requirements when building the ML architecture.
